1. Installing a Kubernetes 1.27.4 cluster on containerd

1.1 hosts entries

192.10.192.16 k-master1

192.10.192.30 k-node1

192.10.192.31 k-node2

…
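If these entries are pushed to several machines by script, appending blindly tends to create duplicates. The following is a minimal sketch of an idempotent append; `add_host_entry` is a hypothetical helper name, not something from the original steps, and it assumes GNU grep.

```shell
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE only when NAME is not already present.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # -w matches NAME as a whole word, so k-node1 does not match k-node10;
    # -F treats NAME as a fixed string rather than a regular expression.
    if ! grep -qwF -- "$name" "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

On a real node this would be called as `add_host_entry /etc/hosts 192.10.192.16 k-master1`, once per entry listed above.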

1.2 System initialization

## Set the time zone and synchronize time

yum install chrony -y

vim /etc/chrony.conf

-----

server ntp1.aliyun.com iburst

-----

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' > /etc/timezone

## Disable the firewall and SELinux

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

setenforce 0

## Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab
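One caveat with the sed one-liner above: every time it runs it adds another `#` to any line containing "swap", including lines that are already commented. A minimal sketch of a re-runnable variant follows; `comment_swap_entries` is a hypothetical helper name, and the code assumes GNU sed and whitespace-separated fstab fields.

```shell
# comment_swap_entries FILE  (hypothetical helper)
# Prefixes '#' to active fstab lines that have "swap" as a
# whitespace-delimited field. Lines already starting with '#' are skipped,
# so running it twice gives the same result as running it once.
comment_swap_entries() {
    local fstab="$1"
    sed -ri '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}
```

On a node it would be called as `comment_swap_entries /etc/fstab` after `swapoff -a`.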

## Kernel parameter tuning

cat > /etc/sysctl.d/k8s_better.conf << EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

# modprobe br_netfilter

# lsmod |grep conntrack

# modprobe ip_conntrack

# sysctl -p /etc/sysctl.d/k8s_better.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

vm.overcommit_memory = 1

vm.panic_on_oom = 0

fs.inotify.max_user_instances = 8192

fs.inotify.max_user_watches = 1048576

fs.file-max = 52706963

fs.nr_open = 52706963

net.ipv6.conf.all.disable_ipv6 = 1

net.netfilter.nf_conntrack_max = 2310720

## Make sure every machine has a unique UUID. On cloned machines, edit the NIC configuration file and delete the UUID line.

cat /sys/class/dmi/id/product_uuid
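With more than a few nodes, comparing UUIDs by eye gets error-prone. As a rough sketch, the values can be collected as "host uuid" lines and checked for repeats; `find_duplicate_uuids` is a hypothetical helper name introduced here for illustration.

```shell
# find_duplicate_uuids  (hypothetical helper)
# Reads "host uuid" lines on stdin and prints every UUID that occurs
# more than once. No output means all UUIDs are unique.
find_duplicate_uuids() {
    awk '{ count[$2]++ } END { for (u in count) if (count[u] > 1) print u }'
}
```

One possible way to feed it, assuming passwordless SSH between the nodes (not covered in this guide): `for h in k-master1 k-node1 k-node2; do echo "$h $(ssh "$h" cat /sys/class/dmi/id/product_uuid)"; done | find_duplicate_uuids`.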

1.3 Install IPVS forwarding support [all nodes]

### System dependency packages

# yum install -y conntrack ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git

### Enable IPVS forwarding

# modprobe br_netfilter

vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

# chmod 755 /etc/sysconfig/modules/ipvs.modules

# bash /etc/sysconfig/modules/ipvs.modules

# lsmod | grep -e ip_vs -e nf_conntrack

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack_ipv4 15053 0

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

nf_conntrack 139264 2 ip_vs,nf_conntrack_ipv4

libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
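Rather than reading the lsmod listing by eye on every node, the check can be scripted. The sketch below reads lsmod output on stdin and prints whichever of the five modules from ipvs.modules is missing; `missing_ipvs_modules` is a hypothetical helper name.

```shell
# missing_ipvs_modules  (hypothetical helper)
# Reads `lsmod` output on stdin and prints each required module that is
# not loaded. No output means every module was found.
missing_ipvs_modules() {
    local loaded m
    # The first lsmod column is the module name; skip the "Module" header row.
    loaded=$(awk '$1 != "Module" { print $1 }')
    for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
        # -x requires the whole line to match, so ip_vs does not match ip_vs_rr.
        printf '%s\n' "$loaded" | grep -qx -- "$m" || echo "$m"
    done
}
```

Usage on a node: `lsmod | missing_ipvs_modules`.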

1.4 Install containerd [all nodes]

## Create the /etc/modules-load.d/containerd.conf configuration file:

cat << EOF > /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

modprobe overlay

modprobe br_netfilter

## Add the Alibaba Cloud YUM repository

wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

## Download and install:

yum install -y containerd.io

## Create the containerd configuration directory

mkdir /etc/containerd -p

## Generate the default configuration file

containerd config default > /etc/containerd/config.toml

## Edit the configuration file

vim /etc/containerd/config.toml

-----

Change SystemdCgroup = false to SystemdCgroup = true

# sandbox_image = "k8s.gcr.io/pause:3.6"

Change to:

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"

-----

# systemctl enable containerd

Created symlink from /etc/systemd/system/multi-user.target.wants/containerd.service to /usr/lib/systemd/system/containerd.service.

# systemctl start containerd
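The two manual edits to config.toml can also be scripted, which helps when the same change has to be made on every node. This is a minimal sketch: `configure_containerd` is a hypothetical helper name, and it assumes GNU sed and that the generated file contains the `SystemdCgroup = false` and `sandbox_image = ...` lines in the form shown above.

```shell
# configure_containerd FILE  (hypothetical helper)
# Switches the runc cgroup driver to systemd and points sandbox_image at
# the Alibaba Cloud mirror, keeping the original indentation.
configure_containerd() {
    local config="$1"
    sed -i \
        -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
        -e 's#^\([[:space:]]*sandbox_image = \).*#\1"registry.aliyuncs.com/google_containers/pause:3.9"#' \
        "$config"
}
```

It would be called as `configure_containerd /etc/containerd/config.toml`. If containerd is already running when the file changes, it needs a `systemctl restart containerd` to pick the change up.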

2. Installing Kubernetes 1.27.x

2.1 Configure the YUM repository for Kubernetes 1.27.4

## Add the Alibaba Cloud YUM repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum makecache

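## List all available versions

yum list kubelet --showduplicates | sort -r | grep 1.27

2.2 Install kubeadm, kubectl, and kubelet

## 1.27.4 is the latest version at the time of writing, so we install it directly

yum install -y kubectl-1.27.4-0 kubelet-1.27.4-0 kubeadm-1.27.4-0

## To keep the cgroup driver used by the container runtime (containerd in this guide) consistent with the one used by the kubelet, it is recommended to edit the following file.

# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

## Only enable kubelet at boot for now. Its configuration file has not been generated yet, so it starts automatically once the cluster is initialized.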

# systemctl enable kubelet

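## List the images required by Kubernetes 1.27.4

kubeadm config images list --kubernetes-version=v1.27.4

## Point crictl at containerd

# crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock

# crictl images

## Initialize the cluster (run on the master)

Initialize the cluster with the kubeadm init command.

Run it on the master. If it fails, see the Kubernetes error summary.

kubeadm init --kubernetes-version=v1.27.4 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.10.192.16 --image-repository registry.aliyuncs.com/google_containers

--apiserver-advertise-address: the address the cluster API server advertises (the master node's IP is fine)

--image-repository: the default image registry (registry.k8s.io, formerly k8s.gcr.io) is not reachable from mainland China, so the Alibaba Cloud mirror is specified here

--kubernetes-version: the Kubernetes version, matching the packages installed above

--service-cidr: the cluster-internal virtual network for Services, the unified entry point for reaching Pods (not set in the command above, so the kubeadm default 10.96.0.0/12 applies)

--pod-network-cidr: the Pod network; it must match the CIDR in the CNI plugin YAML deployed below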

On success, the output looks like this:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.10.192.16:6443 --token 39ab1o.xqzg5i93jrjtr7ag \

    --discovery-token-ca-cert-hash sha256:79ac6baa98f4b8421ea17b307e34e7a3b62de62f0a6e5380d02a8561bf76f920

Run on k-node1 and k-node2:

[root@node1 ~]# kubeadm join 192.10.192.16:6443 --token 39ab1o.xqzg5i93jrjtr7ag \

> --discovery-token-ca-cert-hash sha256:79ac6baa98f4b8421ea17b307e34e7a3b62de62f0a6e5380d02a8561bf76f920

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Check the cluster nodes (on the master):

[root@master1 ~]# mkdir -p $HOME/.kube

[root@master1 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master1 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@master1 ~]# ls /root/.kube/

config

# export KUBECONFIG=/etc/kubernetes/admin.conf

# kubectl get nodes

# kubectl get pods -n kube-system

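2.3 Deploy the cluster network plugin

There are many network plugins to choose from, and only one of them needs to be deployed. Calico is recommended.

Calico is a pure Layer 3 data center networking solution that supports a wide range of platforms, including Kubernetes and OpenStack.

On each compute node, Calico uses the Linux kernel to implement an efficient virtual router (vRouter) that handles data forwarding. Each vRouter uses BGP to advertise the routes of the workloads running on it to the rest of the Calico network.

In addition, the Calico project implements Kubernetes network policy, providing ACL functionality.

1. Download Calico

# wget --no-check-certificate https://projectcalico.docs.tigera.io/archive/v3.25/manifests/calico.yaml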

# vim calico.yaml

…

- name: CALICO_IPV4POOL_CIDR

  value: "10.244.0.0/16"

…

# kubectl create -f calico.yaml
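The CIDR edit in calico.yaml can be scripted instead of done in vim. This is only a sketch under one assumption: that the downloaded manifest ships the pool setting commented out, as `# - name: CALICO_IPV4POOL_CIDR` followed by `#   value: "192.168.0.0/16"`. Check the file before relying on it. `set_calico_pool_cidr` is a hypothetical helper name, and GNU sed is assumed.

```shell
# set_calico_pool_cidr FILE CIDR  (hypothetical helper)
# Uncomments the CALICO_IPV4POOL_CIDR entry if needed and sets its value.
set_calico_pool_cidr() {
    local manifest="$1" cidr="$2"
    sed -i \
        -e 's|^\([[:space:]]*\)# \(- name: CALICO_IPV4POOL_CIDR\)|\1\2|' \
        -e "/- name: CALICO_IPV4POOL_CIDR/{n;s|^\([[:space:]]*\)#   value: .*|\1  value: \"$cidr\"|;s|^\([[:space:]]*value: \).*|\1\"$cidr\"|;}" \
        "$manifest"
}
```

It would be called as `set_calico_pool_cidr calico.yaml 10.244.0.0/16` before applying the manifest. The value has to be the same CIDR that was passed to kubeadm init as --pod-network-cidr.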

2.4 Test and verify

[root@k-master1 ~]# kubectl cluster-info

Kubernetes control plane is running at https://192.10.192.16:6443

CoreDNS is running at https://192.10.192.16:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@k-master1 ~]# kubectl get nodes

NAME        STATUS   ROLES           AGE   VERSION
k-master1   Ready    control-plane   34m   v1.27.4
k-node1     Ready    <none>          24m   v1.27.4
k-node2     Ready    <none>          24m   v1.27.4

[root@k-master1 ~]# kubectl get pods -A

NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-6c99c8747f-mx2gl   1/1     Running   0          12m
kube-system   calico-node-h5hbb                          1/1     Running   0          12m
kube-system   calico-node-hx6pz                          1/1     Running   0          12m
kube-system   calico-node-vsxrw                          1/1     Running   0          12m
kube-system   coredns-7bdc4cb885-4hrhm                   1/1     Running   0          34m
kube-system   coredns-7bdc4cb885-8wk52                   1/1     Running   0          34m
kube-system   etcd-k-master1                             1/1     Running   0          34m
kube-system   kube-apiserver-k-master1                   1/1     Running   0          34m
kube-system   kube-controller-manager-k-master1          1/1     Running   0          34m
kube-system   kube-proxy-9qj6m                           1/1     Running   0          24m
kube-system   kube-proxy-k9wdm                           1/1     Running   0          24m
kube-system   kube-proxy-nsbft                           1/1     Running   0          34m
kube-system   kube-scheduler-k-master1                   1/1     Running   0          34m
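For a scripted version of the node check, the `kubectl get nodes` table can be filtered for anything whose STATUS is not exactly Ready. A minimal sketch follows; `not_ready_nodes` is a hypothetical helper name.

```shell
# not_ready_nodes  (hypothetical helper)
# Reads `kubectl get nodes` output on stdin and prints the name of every
# node whose STATUS column is not exactly "Ready". A cordoned node
# ("Ready,SchedulingDisabled") is therefore reported as well.
not_ready_nodes() {
    # NR > 1 skips the header row; $1 is NAME and $2 is STATUS.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage on the master: `kubectl get nodes | not_ready_nodes`. Empty output means every node reports Ready.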

3. Common containerd commands

# List images

ctr image list

or

crictl images

# Pull an image

ctr i pull --all-platforms registry.xxxxx/pause:3.2

or

ctr i pull --user user:passwd --all-platforms registry.xxx.xx/pause:3.2

or

crictl pull --creds user:passwd registry.xxx.xx/pause:3.2

# Tag an image

ctr -n k8s.io i tag registry.xxxxx/pause:3.2 k8s.gcr.io/pause:3.2

or

ctr -n k8s.io i tag --force registry.xxxxx/pause:3.2 k8s.gcr.io/pause:3.2

# Remove an image tag

ctr -n k8s.io i rm registry.xxxxx/pause:3.2

# Push an image

ctr images push --user user:passwd registry.xxxxx/pause:3.2

4. About Docker and containerd

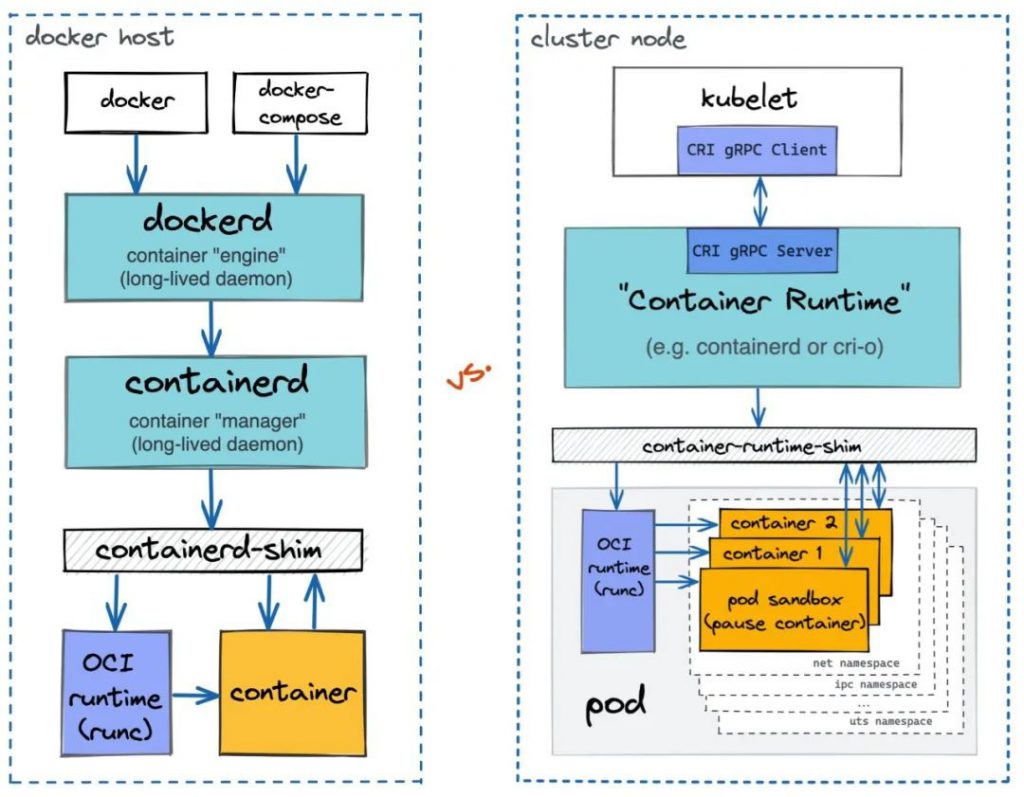

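containerd is a high-level container runtime, also known as a container manager. In short, it is a daemon that manages the complete container lifecycle on a single host: creating, starting, and stopping containers, pulling and storing images, configuring mounts, networking, and so on.

containerd is designed to be easy to embed in larger systems. Docker uses containerd under the hood to run containers. Kubernetes can use containerd through CRI to manage the containers on a single node.

Kubernetes removed dockershim starting with v1.24, and Docker Engine does not support the CRI specification by default, so the two can no longer be integrated directly. In other words, from v1.24 onward Kubernetes no longer natively supports Docker as a container runtime. Docker can still be used as the runtime if really needed, but cri-dockerd has to be installed as well. It acts as a shim that gives Docker Engine a CRI-compliant interface, so that Kubernetes can control Docker through CRI.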